I can't stop thinking about Throbol

This post is going to be more “stream of consciousness” than my other writings. This is noteworthy, as I haven’t really felt the need to write about something that wasn’t a presentation of some form. Up to this point, all of my posts have been of the form “Look at this milestone in this project” or “Here is a trick I figured out”, and I’ve enjoyed having the value of my writing derived from the milestone itself. For example, the value of writing about implementing LQR is self-evident. The rambling that follows here? Not so much.

The driver for the change of pace is that I have had some thoughts floating around my head, and wanted to see if I could bring some order to them through writing them down. In order to achieve that, I would like to discuss:

- Throbol

- My motivation for ORNIS

- General complaints about working with C++

- Thoughts on observability and ROS2

Throbol?

A few months ago, I was grabbed by a post on Hacker News about a “Robot oriented Language”. I was amazed equally at the information density immediately visible, and at the thunderous silence that it received (only 3 comments? Absolutely insane…).

I strongly encourage the reader to quickly check out the Throbol Website, and some of the examples before continuing. Suffice to say, it left an impact on me, and I’ve been pondering it ever since!

I cannot claim to understand the motivations Trevor Blackwell had for developing Throbol, but that won’t stop me from engaging in some good ol’ extrapolation (projection). I have only done some light reading on his background, so everything I say about why it was developed, and about the pains it aims to resolve, is pure conjecture. Reading through his TODO.md, I can see references to some fascinating concepts: REPL-driven development, temporal programming, etc. There has clearly been some thought put into this.

Whatbol?

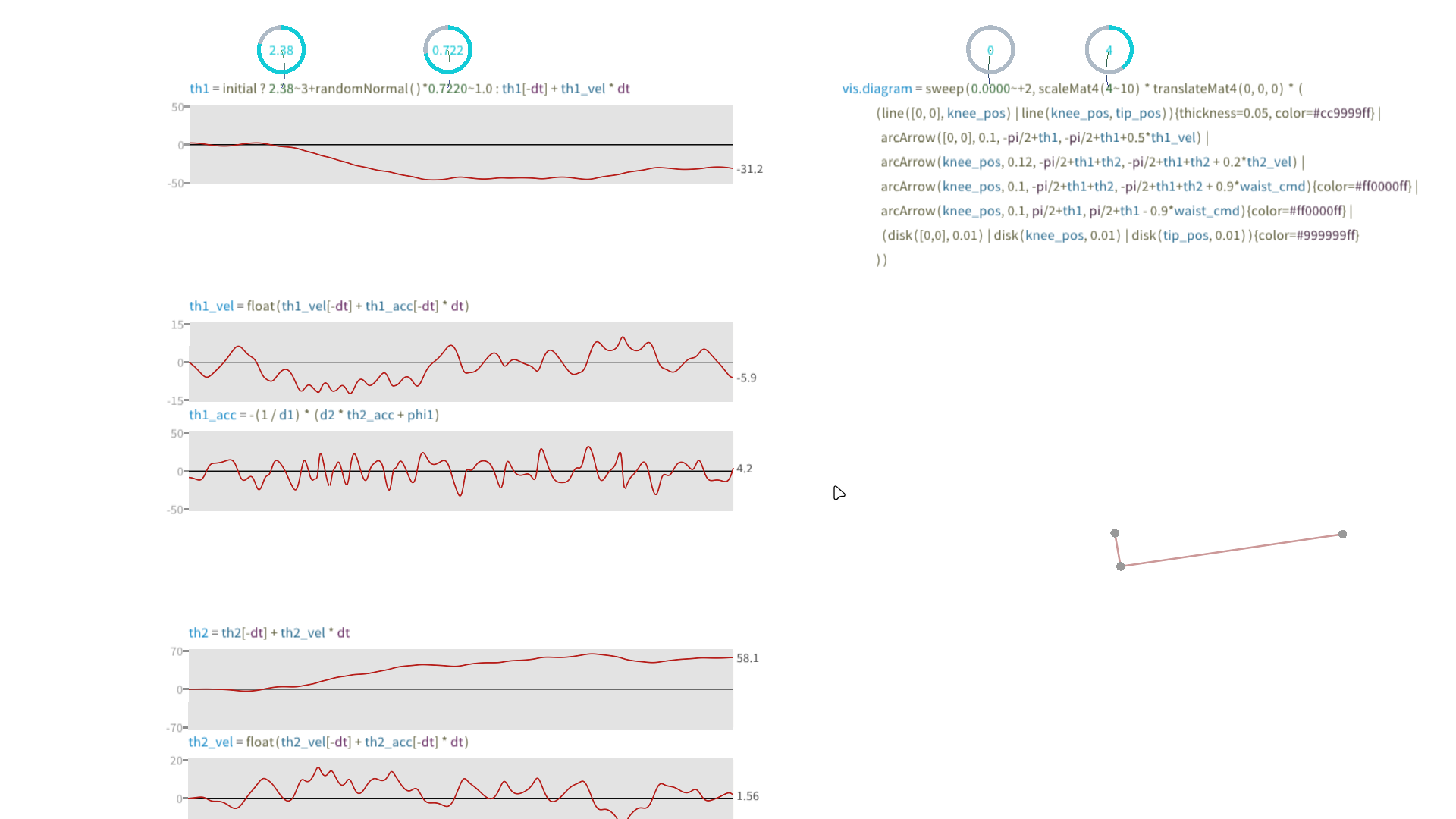

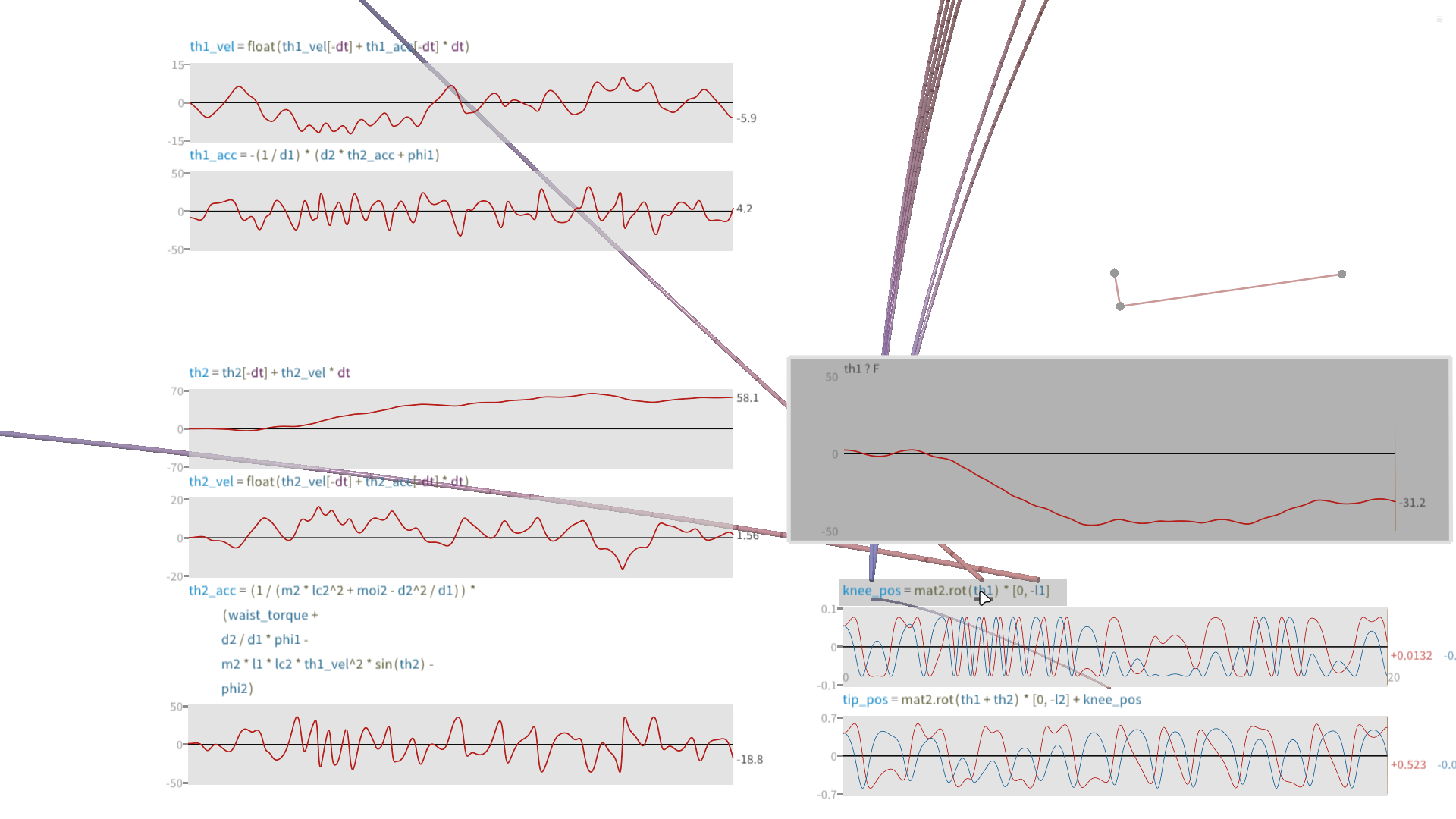

The most obvious facet of Throbol that sets it apart is that it utilises a 2D canvas to show data flow between nodes, which the documentation describes as an effect of being a “time-domain spreadsheet”. The killer feature of this is immediately apparent when one mouses over any of the functions or blocks: you can see not only the source function, but a real-time plot of the function’s output!

But it gets better: you can edit the canvas while it’s running! Just click on a function, make your changes and see the results instantly! This is such a powerful demonstration of interactive development, completely different to the write-compile-test loop that I am used to from C++.

Whybol?

This visualisation-first approach allows one to immediately answer the question “Why is it doing that?”. If you’re from the software world, you’ll know that most of the time this question is answered through strategic use of “print” statements. If you’re a bit more experienced (or very stuck) you might dust off GDB and begin poking around. In robotics though, this is slightly harder: I can say from experience that attempting to debug a misbehaving robot over a spotty SSH connection in the middle of a literal desert is uniquely awful.

So why didn’t you immediately port KIWI over?

For the first 20-30 mins, I was absolutely in love with what I was seeing. I started to toy around with it, wanting to begin solving some control problems. I cloned the repo locally, built it, and started piecing together how one should use Throbol.

This effort slowed once I saw the acrobot example, where it was revealed that one is supposed to use YAML to describe the system. My first reaction was a definite “I don’t like it”, and this feeling grows the more I think about attempting to model a large interconnected system. It feels like this wouldn’t scale gracefully at all with complexity. I cannot imagine how you would integrate Throbol with a system trying to do SLAM or similar. I don’t think Throbol has been written with scalability in mind, instead existing as a demonstration of what we could have if we thought about observability foremost. If I had the time, I would absolutely love to develop a small hobby robot through Throbol.

Obstacles, Observability and ORNIS.

I have been thinking about different approaches to assisting with introspecting robotic systems for a few years now. An early attempt of mine was ORNIS, the goal of which was simple: I wanted to see the state of ROS at a glance, and interact with it with as little friction as possible. At the time, I did (and still do) resent the idea that in order to list my nodes, topics, services etc. I had to type out ros2 *verb* list and figure it out from the wall of text. God help you if you want to plot a JointStates topic: you’re going to need to open RQT, find the topic, then figure out the index of the joint you want. Foxglove Studio has made some strides by being easy-to-use, performant visualisation software; however, it doesn’t quite address the root of the issue.
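To make the “figure out the index of the joint you want” step concrete, here is a minimal sketch of that lookup done by name instead of by eye. It assumes only the parallel `name` and `position` arrays of a `sensor_msgs/JointState` message; the `JointState` dataclass, the `joint_position` helper and the joint names are hypothetical stand-ins so the snippet runs without ROS installed.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class JointState:
    """Hypothetical stand-in mirroring the parallel arrays of sensor_msgs/JointState."""
    name: List[str] = field(default_factory=list)
    position: List[float] = field(default_factory=list)


def joint_position(msg: JointState, joint: str) -> float:
    """Return the position of `joint`, finding its index by name."""
    try:
        idx = msg.name.index(joint)
    except ValueError:
        # Surface the available names so the caller can see what went wrong.
        raise KeyError(f"joint {joint!r} not in message: {msg.name}") from None
    return msg.position[idx]


# Made-up joint names and values, purely for illustration.
msg = JointState(name=["boom", "stick", "bucket"], position=[0.10, -0.42, 1.30])
print(joint_position(msg, "stick"))
```

Nothing here is clever. The point is that this bookkeeping is something a tool could do for you rather than something you repeat manually for every plot.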

I am of the opinion that as long as you’re forced to create a publisher every time you want to inspect a variable, or worse a std::cout, then you’re in an environment where introspection is a second-class citizen. This is exacerbated when you’re attempting to debug a real robot in a real application environment, where you can’t just restart the program: you need to re-create the failure case. This may involve having the robot re-perform a lengthy sequence of manoeuvres (after recompiling and resetting, of course), and then you pray the information you decided to capture actually informs you of the root cause. Why can’t I just SSH in and check which variable was the root cause?

Now, there are approaches one can take to mitigate having to perform these sorts of tricks. One such approach is to develop an extensive enough logging suite. If the machine is automatically logging the data required to diagnose issues, you may be able to extrapolate the source of the issue from the logfiles. However, this is only really useful for machines in the production stage of their lifecycle. For development, where you’re attempting to develop new capabilities, this likely won’t help you much. It’s hard to implement the scaffolding for logging for something that is not yet written.

A second approach may be extensive assertions and error handling in all of your nodes: every input is bounds-checked, and the same goes for every output. One may want to halt the system if the sensor readings aren’t right… I haven’t seen this provide benefit in practice yet. When your software is interacting with the environment, flexibility is king. More often than not, an erroneous encoder reading is not a software bug that should bring the whole system down; maybe your wheel is actually slipping. Whether wheel slip is noteworthy enough to start logging, as part of an “I have an abnormal reading, there may be a failure soon” approach, is up for discussion. I once again think this solution is more suitable for when you’ve already had the robot accomplishing its task a few times, rather than during the initial prototyping stage.
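As a rough sketch of the “note the abnormal reading, but don’t bring the system down” idea, the snippet below bounds-checks a value and records a warning instead of raising. Everything in it is an assumption made for illustration: the `check_reading` helper, the signal name, the limits and the wheel speeds are all made up.

```python
import logging
from typing import List, Tuple

logger = logging.getLogger("bounds")


def check_reading(name: str, value: float, low: float, high: float,
                  anomalies: List[Tuple[str, float]]) -> bool:
    """Return True if value lies in [low, high]; otherwise record it and warn, without raising."""
    if low <= value <= high:
        return True
    anomalies.append((name, value))
    logger.warning("%s=%.3f outside [%.3f, %.3f]", name, value, low, high)
    return False


anomalies: List[Tuple[str, float]] = []
for speed in [1.0, 1.1, 4.7, 1.2]:  # made-up wheel speeds in rad/s
    check_reading("left_wheel_speed", speed, 0.0, 3.0, anomalies)
print(anomalies)
```

The control loop keeps running through the out-of-range sample, and the `anomalies` list is what you would inspect afterwards. Whether that record is worth keeping is exactly the open question above.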

LISP in <SPACE>?!

Around a similar time to my discovery of Throbol, I came across a post about LISP in space, which was an interview with a bloke named Ron Garret. This also piqued my interest, as I had never before seen the terms LISP and Robotics share a horizontal axis. I’ve been slowly exposed to more and more of the world of LISPs, mostly due to my having used Emacs as my daily driver for 4 years at this point. Between us though, I have never used a LISP for anything other than extending Emacs (so far). Thus, the idea of using a LISP for robotics, which in my sphere is usually dominated by either C++ or Python, was an eye-catcher.

At the time of the article’s subject (the 80s/90s), it seems like the choice of language would have been a toss-up between C, Assembly, Ada or a Lisp of some sort. The constraints of the systems of the time largely influenced the choice of language used. In this case, they couldn’t run the LISP directly on the processor, so they used a custom compiler to generate the machine code required for the interpreter. At least to Ron the payoff was worth the effort, as the flexibility afforded by LISP allowed for (apparently) orders of magnitude faster development times.

There is an infamous post on REPL-driven programming, which describes a few aspects of the apparent joys of developing in LISP. The one that spurred me into motion was the author’s description of a breakloop, in which a LISP application will, upon failure, drop into a fully featured REPL environment. This allows one to interact with the running program, change variables, modify functions, etc. Coming from the world of GDB, where getting non-standalone nodes into GDB can be an absolute nightmare, this strongly appeals to me.

A discussion with a friend

Coincidentally, I was having an afternoon sort-of hackathon with a very good friend of mine, Chris, where we got into conversation about observability. As it turns out, we had both become frustrated with some aspects of programming. Chris has always seemed to live on the more computer science side of life than I do; where I seem to gravitate towards hydraulic control of (very) large machines, he appears to have gravitated towards areas such as Elixir, reinforcement learning and high performance computing. Despite our completely different backgrounds, we had both experienced similar frustrations about the arcana of computer programming.

A few years ago, Chris was discussing a language he was beginning to explore, known as Elixir. On his recommendation, I gave it a brief try, wrote a few hello-worlds, and shelved it. While it seemed interesting, I was not yet unhappy enough with C++ to really dive in. Cut to a few weeks ago: I was in discussion with a friend of Chris, who was spinning up a start-up aiming to innovate in the world of robotics. Naturally the topic of ROS2 came up, at which point he suggested that ROS isn’t a good solution to the problem of distributed processing. He described ROS2 as “Erlang done poorly”, which is a hell of a statement. It got me thinking though, and I can’t help but notice all of the signs pointing me to have a look at what else is out there.

Future

The point of all of this rambling is that I would like to do some exploring. There are clearly some extremely interesting ideas being investigated outside of my realm of C++/Python. I am not a computer scientist, nor a software engineer, so before now I’ve never really been concerned with these things. C++ works well for me, and I like it, but I’m ready for something different. I’m far from the first person to think about bridging the world of Elixir and Robotics, the first piece of evidence being Nerves, which allows for deploying Elixir on embedded devices, with native access to the GPIOs. I also recently discovered rclex, which might make for a good stepping stone with my next project. It allows for creation of ROS2 nodes written entirely in Elixir, providing the ability to have these nodes interact with the rest of the ROS2 ecosystem normally. This means I would be able to receive all of the benefits from the maturity of ROS2 (Navigation2, MoveIt, etc.), while dipping my toes into the new language. Who knows, maybe the next entry in KIWI may be an exploration into Elixir? (It won’t, I’ve already started on it :(, but maybe the next NEXT one?).

Until next time…